🤖 Your Own Devops Agent: The future of observability

Wed 28 May 2025

🚀 Introduction

All the source code and configurations used in this article are publicly available in this GitHub repository: [https://github.com/matgou/devops-agents] 💻

Google has recently released the Agent Development Kit (ADK), a framework designed to simplify the development and deployment of AI-driven agents. This framework is remarkably user-friendly. In this context, an agent is an interactive API that leverages a Large Language Model (LLM), like Gemini, and is further empowered by your custom scripts to generate responses based on their output.

In the DevSecOps world, we’re already equipped with a multitude of tools and scripts (CLI, API, and more). Now, imagine equipping an AI with all of these capabilities.

As DevOps engineers, we often find ourselves manually reading logs, describing resources to check their status, and watching events. What if each of us had a dedicated assistant to perform these initial diagnostic steps for us and point directly to the relevant information?

This article explores how to build and utilize an ADK (Python-based) Kubernetes agent. This agent will act as a diagnostic tool, callable via chat or an API, to quickly gather crucial information about our cluster and provide initial diagnostic insights.

Try it for yourself. Personally, I am convinced that this is the future of observability.

🛠️ ADK agent development quick start

Getting started with the Agent Development Kit (ADK) is straightforward. This section will guide you through how to set up and create a (very) basic agent.

Prerequisites

- Before you begin, ensure you have Python installed (including venv for virtual environments and pip for package installation).

- You will also need a Google Cloud Platform (GCP) project set up to use Vertex AI for calling the Gemini model.

- Create a folder for your project:

    mkdir kubernetes-admin && cd kubernetes-admin

- Initialize a virtual environment:

    python -m venv venv

- Activate the virtual environment:

  - On macOS and Linux:

      source venv/bin/activate

  - On Windows:

      .\venv\Scripts\activate

- Install Poetry:

    pip install poetry

- Initialize the Poetry project within this kubernetes-admin directory:

    poetry init

  Follow the prompts. You can accept the defaults for most of them, but ensure the package name is kubernetes-admin.

- Add the ADK dependency to your project:

    poetry add google-adk

- Create a README.md containing:

    # Kubernetes-admin

Basic structure

- Create the following files and directories within the kubernetes-admin directory you created earlier:

- kubernetes_admin/
  |- agent.py        # Agent instructions: initial prompt and parameters
  |- __init__.py     # A default init file
  |- .env
  |- tools/          # Will host future tool interfaces
     |- __init__.py  # A default init file for the tools package
- README.md          # existing default README.md file
- pyproject.toml     # existing Poetry config file
- poetry.lock        # existing Poetry lock file
- venv/              # virtual env directory

- Create the inner kubernetes_admin directory and navigate into it (this is where your Python package will reside):

    mkdir kubernetes_admin && cd kubernetes_admin

- Populate the kubernetes_admin/__init__.py file. This makes the agent module accessible.

    # In kubernetes_admin/kubernetes_admin/__init__.py
    from . import agent

- Create the tools package. The kubernetes_admin/tools/__init__.py file can remain empty for now.

    # From the inner kubernetes_admin/ directory
    mkdir tools && touch tools/__init__.py
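The same layout can also be created from Python, which may be handy on Windows where `touch` is not available. This is only a sketch: the `scaffold` helper is hypothetical and simply mirrors the files listed above.

```python
from pathlib import Path


def scaffold(project_root):
    """Create the inner package layout described above and return its path."""
    package = Path(project_root) / "kubernetes_admin"
    tools = package / "tools"
    tools.mkdir(parents=True, exist_ok=True)

    # Expose the agent module from the package (overwrites an existing file).
    (package / "__init__.py").write_text("from . import agent\n")

    # Empty placeholders, filled in by the following sections.
    (tools / "__init__.py").touch()
    (package / "agent.py").touch()
    (package / ".env").touch()
    return package


if __name__ == "__main__":
    print(f"Created {scaffold('.')}")
```

Run it from the kubernetes-admin project directory; existing empty files are left untouched by `touch()`.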

🧠 Giving Agent Instructions

The agent.py file (located at kubernetes-admin/kubernetes_admin/agent.py) is crucial in the Agent Development Kit (ADK). It defines the initial prompt and core instructions for the AI agent. Later, it will be expanded to include the specific tools the agent can use and the logic for how it should utilize them.

You can use AI to help generate the initial prompt and instructions, or write them yourself.

Create/edit kubernetes-admin/kubernetes_admin/agent.py with the following content:

# In kubernetes_admin/kubernetes_admin/agent.py

"""Kubernetes_Admin: Agent for interacting with and managing a Kubernetes cluster."""

from google.adk.agents import LlmAgent

MODEL = "gemini-2.5-pro-preview-05-06"

KUBERNETES_ADMIN_INSTRUCTION = """

You are Kubernetes Admin, an AI assistant designed to help users manage and understand their Kubernetes (K8s) clusters.

Your primary goal is to help users by:

- Answering questions about their Kubernetes cluster.

- Retrieving information about resources like pods, nodes, services, deployments, namespaces, etc. using the available tools.

- Explaining Kubernetes concepts relevant to their queries.

- Assisting in understanding resource status and configurations.

Always strive to be clear, concise, and helpful in your responses.

Example interactions:

- User: "How many pods are running in the 'default' namespace?"

- You: (After using a tool to get pod count) "There are X pods currently running in the 'default' namespace."

- User: "What's the status of the pod named 'my-app-pod-123'?"

- You: (After using a tool to get pod status) "The pod 'my-app-pod-123' is currently in a 'Running' state."

"""

kubernetes_admin_agent = LlmAgent(

name="kubernetes_admin_agent",

model=MODEL,

description=(

"An AI agent that helps users explore and understand their Kubernetes cluster by answering questions and retrieving information about resources."

),

instruction=KUBERNETES_ADMIN_INSTRUCTION,

tools=[],  # No tools yet; the kubectl tool is added in a later step

)

root_agent = kubernetes_admin_agent

Testing the agent

Before running the test, you must configure your cloud environment:

export GOOGLE_GENAI_USE_VERTEXAI=true

export GOOGLE_CLOUD_PROJECT=<Complete with your GCP project ID>

export GOOGLE_CLOUD_LOCATION=us-central1

export GOOGLE_CLOUD_STORAGE_BUCKET=${GOOGLE_CLOUD_PROJECT}-adk-bucket-$(openssl rand -hex 4) # generate a unique bucket name

gcloud auth application-default login

gcloud storage buckets create gs://$GOOGLE_CLOUD_STORAGE_BUCKET
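Forgetting one of these exports is an easy mistake, so a quick check before starting the agent can save a confusing error later. The following is a minimal sketch: the variable names are the ones exported above, while the `missing_vertex_env` helper is hypothetical and not part of ADK.

```python
import os

# Names taken from the export commands above.
REQUIRED_VARS = (
    "GOOGLE_GENAI_USE_VERTEXAI",
    "GOOGLE_CLOUD_PROJECT",
    "GOOGLE_CLOUD_LOCATION",
)


def missing_vertex_env(environ=None):
    """Return the required variable names that are unset or empty."""
    env = os.environ if environ is None else environ
    return [name for name in REQUIRED_VARS if not env.get(name)]


if __name__ == "__main__":
    missing = missing_vertex_env()
    if missing:
        print("Missing environment variables: " + ", ".join(missing))
    else:
        print("Vertex AI environment looks complete.")
```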

Now you can run the test with the following command (from the kubernetes-admin/kubernetes_admin/ directory):

adk run .

If everything is configured correctly, you will see the following prompt, and you can interact with it (use Ctrl+D to exit):

Log setup complete: /tmp/agents_log/agent.20250528_200909.log

To access latest log: tail -F /tmp/agents_log/agent.latest.log

Running agent kubernetes_admin_agent, type exit to exit.

[user]: hello

[kubernetes_admin_agent]: Hello! I'm Kubernetes Admin, your AI assistant for managing and understanding your Kubernetes cluster.

How can I help you today? For example, you can ask me about pods, nodes, services, or any other Kubernetes resources.

[user]: how many pod in my kubernetes clusters

[kubernetes_admin_agent]: I can help you with that! To tell you how many pods are in your cluster, I need to check all namespaces.

Should I proceed to get the list of all pods across all namespaces in your cluster?

Giving tools to my agent

First, let’s write a Python function to interact with kubectl and wrap it as an ADK tool.

- Create/edit kubernetes-admin/kubernetes_admin/tools/kubectl_tool.py with the following content:

# In kubernetes_admin/kubernetes_admin/tools/kubectl_tool.py
"""Tool for executing kubectl get commands."""
import subprocess  # For actual kubectl calls

from google.adk.tools import FunctionTool


def run_kubectl(verbs: str = "get", resource_type: str = "pods", resource_name: str = "", namespace: str = "default") -> str:
    """
    Runs a kubectl command with the specified verb, resource type, optional resource name, and namespace.

    Examples:
    To get configuration of a specific pod use verbs: "describe", namespace: "<namespace>", resource_type: "pods", resource_name: "<custom pod name>"
    To get logs of a specific pod use verbs: "logs", namespace: "<namespace>", resource_type: "", resource_name: "<custom pod name>"
    To list pods in all namespace use verbs: "get", namespace: "all", resource_type: "pods", resource_name: ""

    Args:
        verbs: The kubectl verb to use (e.g., 'get', 'describe', 'logs', 'top').
        resource_type: The type of Kubernetes resource (e.g., 'pods', 'nodes', 'services', 'deployments').
        resource_name: Optional. The specific name of the resource. If empty, lists all resources of the type.
        namespace: Optional. The Kubernetes namespace, or 'all' for every namespace. Defaults to 'default'.

    Returns:
        A string containing the output of the kubectl command or an error message.
    """
    try:
        command = ["kubectl", verbs]
        if resource_type and resource_name:
            command.append(f"{resource_type}/{resource_name}")
        elif resource_type or resource_name:
            # Only one is set, e.g. "logs" takes just a pod name
            command.append(resource_type or resource_name)
        if namespace != "all":
            command.extend(["-n", namespace])
        else:
            command.extend(["--all-namespaces"])
        result = subprocess.run(command, capture_output=True, text=True, check=True, timeout=30)
        return result.stdout
    except FileNotFoundError:
        return "Error: kubectl command not found. Please ensure kubectl is installed and in your PATH."
    except subprocess.CalledProcessError as e:
        return f"Error executing kubectl command: {e.stderr}"
    except subprocess.TimeoutExpired:
        return "Error: kubectl command timed out."
    except Exception as e:
        return f"An unexpected error occurred: {str(e)}"


kubectl_tool = FunctionTool(func=run_kubectl)
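Because the LLM chooses the verb, it may be worth restricting the tool to read-only verbs before anything reaches `subprocess`. The sketch below is one possible way to do that, not the article's implementation: `READ_ONLY_VERBS` and `build_kubectl_command` are hypothetical names, and the allowlist simply reuses the verbs mentioned in the docstring.

```python
# Hypothetical helper: not part of the article's tool or of ADK.
READ_ONLY_VERBS = {"get", "describe", "logs", "top"}


def build_kubectl_command(verb, resource_type="pods", resource_name="", namespace="default"):
    """Build the kubectl argument list, rejecting verbs outside the allowlist."""
    if verb not in READ_ONLY_VERBS:
        raise ValueError(f"verb {verb!r} is not allowed")

    command = ["kubectl", verb]

    # Join type and name only when both are present ("logs" takes just a pod name).
    target = "/".join(part for part in (resource_type, resource_name) if part)
    if target:
        command.append(target)

    if namespace == "all":
        command.append("--all-namespaces")
    else:
        command.extend(["-n", namespace])
    return command


if __name__ == "__main__":
    print(build_kubectl_command("get", "pods", "", "all"))
```

Keeping command construction in a pure function like this also makes it testable without a cluster; the existing tool could call it and pass the result to `subprocess.run`.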

- Now, let’s update kubernetes-admin/kubernetes_admin/agent.py to include this new tool.

from .tools.kubectl_tool import kubectl_tool # Import the FunctionTool instance

...

...

kubernetes_admin_agent = LlmAgent(

name="kubernetes_admin_agent",

model=MODEL,

description=(

"An AI agent that helps users explore and understand their Kubernetes cluster by answering questions and retrieving information about resources."

),

instruction=KUBERNETES_ADMIN_INSTRUCTION,

tools=[kubectl_tool], # Pass the FunctionTool instance

)

- Still in kubernetes-admin/kubernetes_admin/agent.py, update the agent’s instructions in KUBERNETES_ADMIN_INSTRUCTION to inform the LLM about the new tool and how to use it:

KUBERNETES_ADMIN_INSTRUCTION = """

...

...

You have a tool named 'run_kubectl' to fetch information from the Kubernetes cluster by executing kubectl commands.

Tool 'run_kubectl' arguments:

- 'verbs': (string, required) The kubectl verb to use (e.g., 'get', 'describe', 'logs', 'top').

- 'resource_type': (string, required) The type of Kubernetes resource (e.g., 'pods', 'nodes', 'services', 'deployments', 'namespaces').

- 'resource_name': (string, optional) The specific name of the resource. If not provided, all resources of the given type in the namespace will be listed.

- 'namespace': (string, optional, defaults to 'default') The Kubernetes namespace to query. Use 'all' to query every namespace.

When a user asks for information that requires querying the cluster (e.g., "list pods", "get node status", "describe service my-service", "get logs for pod xyz"), you MUST use the 'run_kubectl' tool.

Clearly state that you are using the tool, what you are querying for, and then present the information returned by the tool.

Determine the correct 'verbs' argument based on the user's request (e.g., "list" or "how many" implies 'get', "what's the status" implies 'get', "describe" implies 'describe', "show logs" implies 'logs').

"""

And now, a real test

Now, let’s perform a real test to see if our agent uses kubectl as expected. (Ensure you have a valid kubeconfig file and are connected to a Kubernetes cluster for this test.)

# In kubernetes-admin/kubernetes_admin/ directory

$ adk run .

...

[user]: how many pod in my cluster all namespaces

[kubernetes_admin_agent]: Okay, I can help you with that. I will use the `run_kubectl` tool to list all pods in all namespaces and then count them for you.

[kubernetes_admin_agent]: I have retrieved the list of all pods across all namespaces. There are a lot of them!

Based on the output, there are 119 pods in your cluster across all namespaces.
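In this exchange the model counts the rows of the kubectl output itself. On large clusters it may be more reliable to count deterministically in code and hand the number to the model. A minimal sketch, assuming the default table output of `kubectl get pods` (the `count_pods` helper is hypothetical):

```python
def count_pods(kubectl_output: str) -> int:
    """Count data rows in `kubectl get pods` table output, skipping the header."""
    lines = [line for line in kubectl_output.splitlines() if line.strip()]

    # The first non-empty line is the column header (NAMESPACE or NAME first).
    if lines and lines[0].split()[0] in ("NAMESPACE", "NAME"):
        lines = lines[1:]
    return len(lines)


if __name__ == "__main__":
    sample = (
        "NAMESPACE     NAME          READY   STATUS    RESTARTS   AGE\n"
        "default       web-1         1/1     Running   0          2d\n"
        "kube-system   coredns-abc   1/1     Running   0          9d\n"
    )
    print(count_pods(sample))
```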

Kubernetes integration

We will now deploy our agent into a Kubernetes cluster so that other users can use it.

🐳 Set up Uvicorn

Expose the web interface by setting up Uvicorn and adding a main.py file.

# In kubernetes-admin/main.py

import os

import uvicorn

from fastapi import FastAPI

from google.adk.cli.fast_api import get_fast_api_app

# Get the directory where main.py is located

AGENT_DIR = os.path.dirname(os.path.abspath(__file__))

# Example session DB URL (e.g., SQLite)

SESSION_DB_URL = "sqlite:///./sessions.db"

# Example allowed origins for CORS

ALLOWED_ORIGINS = ["http://localhost", "http://localhost:8080", "*"]

# Set web=True if you intend to serve a web interface, False otherwise

SERVE_WEB_INTERFACE = True

# Call the function to get the FastAPI app instance

app: FastAPI = get_fast_api_app(

agents_dir=AGENT_DIR,

session_db_url=SESSION_DB_URL,

allow_origins=ALLOWED_ORIGINS,

web=SERVE_WEB_INTERFACE,

)

if __name__ == "__main__":
    # Use the PORT environment variable (set in the Dockerfile and the Deployment), defaulting to 8080
    uvicorn.run(app, host="0.0.0.0", port=int(os.environ.get("PORT", 8080)))
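Once this server is running, the agent can be called over HTTP as well as through the web interface. The sketch below only builds the request. It assumes the `/run` endpoint and the snake_case field names shown in the ADK testing documentation around the time of writing, so check them against your installed version. The app name `kubernetes_admin` (the agent package directory), the user and session IDs, and the `build_run_request` helper are illustrative assumptions.

```python
import json
import urllib.request


def build_run_request(base_url, text, app_name="kubernetes_admin",
                      user_id="u_123", session_id="s_123"):
    """Build a POST request asking the agent to answer `text`."""
    payload = {
        "app_name": app_name,      # assumed: name of the agent package directory
        "user_id": user_id,        # placeholder value
        "session_id": session_id,  # placeholder value
        "new_message": {"role": "user", "parts": [{"text": text}]},
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/run",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    request = build_run_request("http://localhost:8080", "How many pods are running?")
    print(request.full_url)
    # To actually send it: urllib.request.urlopen(request)
```

In the ADK documentation, a session is created first with a POST to /apps/<app_name>/users/<user_id>/sessions/<session_id>; the same caveat about verifying against your version applies.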

🐳 Build a Docker container

- Here’s a Dockerfile to build a container image for your Python agent. This Dockerfile:

  - Uses a slim Python base image.

  - Installs kubectl (as your agent’s tool uses it) and Poetry.

  - Sets up a non-root user for better security.

  - Installs your project dependencies using Poetry.

  - Sets the default command to serve your ADK agent over HTTP with Uvicorn.

# In kubernetes_admin/Dockerfile

FROM python:3.13-slim

WORKDIR /app

RUN pip install poetry

ENV PORT=8080

RUN apt update && apt install -y kubernetes-client/stable prometheus/stable && apt clean

COPY . .

RUN adduser --disabled-password --gecos "" myuser && \

chown -R myuser:myuser /app

USER myuser

RUN poetry install

ENV PATH="/home/myuser/.local/bin:$PATH"

CMD poetry run uvicorn main:app --host 0.0.0.0 --port $PORT

- (One-time setup) Create an Artifact Registry repository if you haven’t already.

# us-central1 is used as the region here; adjust it and GOOGLE_CLOUD_PROJECT to match your setup.

gcloud artifacts repositories create adk-repo --repository-format=docker \

--location=us-central1 \

--description="ADK agent repository" \

--project=${GOOGLE_CLOUD_PROJECT}

- Build the Docker image and push it to Artifact Registry.

# us-central1 is used as the region here; adjust it and GOOGLE_CLOUD_PROJECT to match your setup.

gcloud builds submit --tag us-central1-docker.pkg.dev/${GOOGLE_CLOUD_PROJECT}/adk-repo/k8s-admin-agent:latest

🛡️ Add RBAC

The following manifest creates the kubernetes-admin-agent namespace and a Kubernetes ServiceAccount in it. It also defines a ClusterRole with read-only permissions (get, list, watch) across all API groups and resources, and then uses a ClusterRoleBinding to grant these permissions to the created ServiceAccount.

# In kubernetes_admin/rbac.yaml file
apiVersion: v1
kind: Namespace
metadata:
  name: kubernetes-admin-agent
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: kubernetes-admin-agent
  namespace: kubernetes-admin-agent
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: kubernetes-admin-agent
rules:
  - apiGroups:
      - "*"
    resources:
      - "*"
    verbs:
      - get
      - list
      - watch
---
# This cluster role binding allows the kubernetes-admin-agent ServiceAccount to read all resources in any namespace.
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubernetes-admin-agent-global
subjects:
  - kind: ServiceAccount
    name: kubernetes-admin-agent
    namespace: kubernetes-admin-agent
roleRef:
  kind: ClusterRole
  name: kubernetes-admin-agent
  apiGroup: rbac.authorization.k8s.io

Apply this configuration:

kubectl apply -f kubernetes_admin/rbac.yaml
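After applying the manifest, it can be reassuring to confirm that the ServiceAccount is able to read but not modify resources. One way is `kubectl auth can-i` with impersonation. The sketch below only builds the argument list; `can_i_command` is a hypothetical helper, and running the command requires that your own user is allowed to impersonate service accounts.

```python
def can_i_command(verb, resource, namespace="kubernetes-admin-agent",
                  service_account="kubernetes-admin-agent"):
    """Build a `kubectl auth can-i` call that impersonates the ServiceAccount."""
    subject = f"system:serviceaccount:{namespace}:{service_account}"
    return ["kubectl", "auth", "can-i", verb, resource, "--as", subject]


if __name__ == "__main__":
    # Print the commands; paste them into a shell or pass them to subprocess.run.
    for verb in ("list", "delete"):
        print(" ".join(can_i_command(verb, "pods")))
```

With the ClusterRole above, the `list` check would be expected to answer yes and the `delete` check no.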

Add a secret for Google credentials

To allow your agent running in Kubernetes to authenticate with Google Cloud services (specifically Vertex AI for Gemini), you need to create a GCP service account, grant it the necessary permissions, generate a key for it, and store this key as a Kubernetes secret.

Make sure your GOOGLE_CLOUD_PROJECT environment variable is set correctly before running these commands.

# 1. Create the service account

gcloud iam service-accounts create kubernetes-admin-agent

# 2. Grant the service account the "Vertex AI User" role on your project

gcloud projects add-iam-policy-binding ${GOOGLE_CLOUD_PROJECT} \

--member="serviceAccount:kubernetes-admin-agent@${GOOGLE_CLOUD_PROJECT}.iam.gserviceaccount.com" \

--role="roles/aiplatform.user"

# 3. Create and download a key for the service account

gcloud iam service-accounts keys create key.json \

--iam-account="kubernetes-admin-agent@${GOOGLE_CLOUD_PROJECT}.iam.gserviceaccount.com"

# 4. Create a Kubernetes secret from the downloaded key file

kubectl create secret generic google-cloud-keys -n kubernetes-admin-agent --from-file=key.json=key.json

Deploy a container for our agent and expose it as a service

Let’s now create the Kubernetes deployment and service manifest for your agent. This file, kubernetes_admin/deployment.yaml, will define how your agent runs within your Kubernetes cluster.

Remember to replace the placeholder <Complete with your GCP project ID> with your actual Google Cloud Project ID in two places within this file:

* Under spec.template.spec.containers[0].image for the Docker image path.

* Under spec.template.spec.containers[0].env for the GOOGLE_CLOUD_PROJECT environment variable.

# In kubernetes_admin/deployment.yaml
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: kubernetes-admin-agent
spec:
  replicas: 1
  selector:
    matchLabels:
      app: kubernetes-admin-agent
  template:
    metadata:
      labels:
        app: kubernetes-admin-agent
    spec:
      serviceAccountName: kubernetes-admin-agent
      volumes:
        - name: google-cloud-keys
          secret:
            secretName: google-cloud-keys
      containers:
        - name: kubernetes-admin-agent
          imagePullPolicy: Always
          image: us-central1-docker.pkg.dev/<Complete with your GCP project ID>/adk-repo/k8s-admin-agent:latest
          resources:
            limits:
              memory: "1Gi"
              cpu: "500m"
              ephemeral-storage: "128Mi"
            requests:
              memory: "128Mi"
              cpu: "500m"
              ephemeral-storage: "128Mi"
          ports:
            - containerPort: 8080
          volumeMounts:
            - name: google-cloud-keys
              readOnly: true
              mountPath: "/etc/google-cloud-keys"
          env:
            - name: PORT
              value: "8080"
            - name: GOOGLE_CLOUD_PROJECT
              value: <Complete with your GCP project ID>
            - name: GOOGLE_APPLICATION_CREDENTIALS
              value: /etc/google-cloud-keys/key.json
            - name: GOOGLE_CLOUD_LOCATION
              value: us-central1
            - name: GOOGLE_GENAI_USE_VERTEXAI
              value: "true"
            # If using AI Studio, set GOOGLE_GENAI_USE_VERTEXAI to false and set the following:
            # - name: GOOGLE_API_KEY
            #   value: GOOGLE_API_KEY
            # Add any other necessary environment variables your agent might need
---
apiVersion: v1
kind: Service
metadata:
  name: kubernetes-admin-agent
spec:
  type: LoadBalancer
  ports:
    - port: 80
      targetPort: 8080
  selector:
    app: kubernetes-admin-agent

Now apply this configuration:

kubectl apply -n kubernetes-admin-agent -f deployment.yaml

Find the external IP of the agent’s service, then open http://<EXTERNAL_IP_ADDRESS> in a browser:

kubectl get svc -n kubernetes-admin-agent
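If you prefer to script this step, the address can be read from the Service's JSON representation. This is a minimal sketch, assuming the output of `kubectl get svc kubernetes-admin-agent -n kubernetes-admin-agent -o json` is passed in as a string; `external_addresses` is a hypothetical helper.

```python
import json


def external_addresses(service_json: str):
    """Return the load balancer IPs or hostnames from a Service JSON document."""
    service = json.loads(service_json)
    ingress = service.get("status", {}).get("loadBalancer", {}).get("ingress") or []

    addresses = []
    for entry in ingress:
        # Providers publish either an IP or a hostname.
        address = entry.get("ip") or entry.get("hostname")
        if address:
            addresses.append(address)
    return addresses


if __name__ == "__main__":
    sample = '{"status": {"loadBalancer": {"ingress": [{"ip": "203.0.113.10"}]}}}'
    print(external_addresses(sample))
```

An empty list usually means the load balancer is still being provisioned.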

⚠️ It’s very important to note that for this demonstration, we have exposed the agent to the internet. However, in a real-world scenario, we would implement authentication in front of it or expose it only internally.

🎉 Testing and experimenting

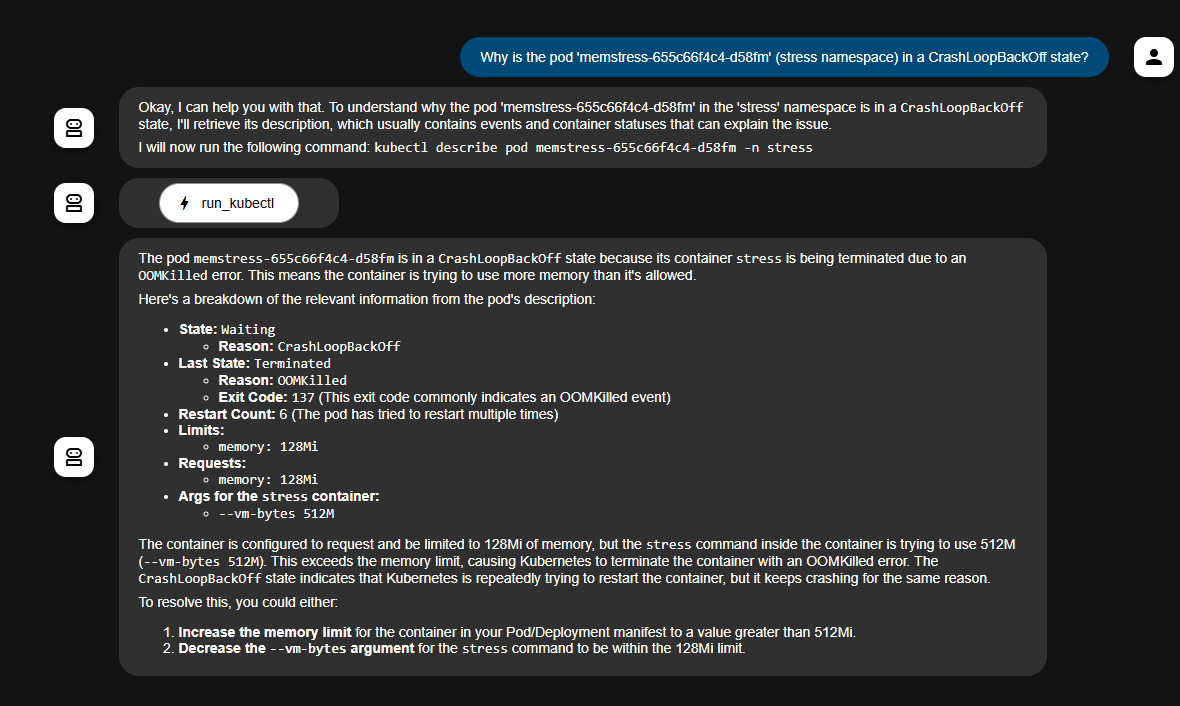

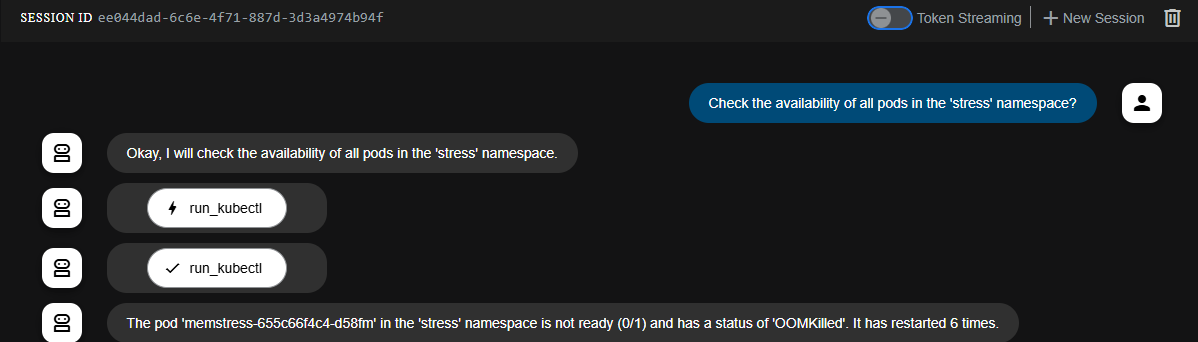

We can conduct many tests to experiment with our agent, asking it increasingly complex questions about concepts like “Why isn’t the ingress working?” or “Why is this pod not running?”. However, for this article, I’ve chosen to demonstrate how easily the agent can help detect and understand an OOMKill event. I won’t detail the setup of a “stress” pod used to intentionally generate an OOMKill in my cluster, but let’s observe the interaction with our agent.
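For reference, the signal behind an OOMKill diagnosis is visible in the pod status: a container whose current or last terminated state has the reason OOMKilled. The sketch below shows how that could be checked in code, assuming the output of `kubectl get pod <name> -o json` as input; `oom_killed_containers` is a hypothetical helper and not part of the agent built in this article.

```python
import json


def oom_killed_containers(pod_json: str):
    """Return names of containers whose current or last state is OOMKilled."""
    pod = json.loads(pod_json)
    hits = []
    for status in pod.get("status", {}).get("containerStatuses", []):
        for key in ("state", "lastState"):
            terminated = (status.get(key) or {}).get("terminated") or {}
            if terminated.get("reason") == "OOMKilled":
                hits.append(status.get("name"))
                break  # report each container once
    return hits


if __name__ == "__main__":
    sample = json.dumps({
        "status": {"containerStatuses": [
            {"name": "stress",
             "state": {"waiting": {"reason": "CrashLoopBackOff"}},
             "lastState": {"terminated": {"reason": "OOMKilled", "exitCode": 137}}},
            {"name": "sidecar", "state": {"running": {}}, "lastState": {}},
        ]}
    })
    print(oom_killed_containers(sample))
```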

Diagnose OOMKill

The agent can be particularly helpful in diagnosing common Kubernetes issues. For instance, if you suspect a pod is being OOMKilled (Out Of Memory Killed), you could ask:

Conclusion and outlook

In this article, we’ve journeyed through the process of building and deploying a practical AI-driven Kubernetes agent using Google’s Agent Development Kit. We started with the basics of ADK, created an agent capable of understanding natural language, and then empowered it with a kubectl tool to interact directly with our cluster. We also covered the essential steps to containerize this agent, secure its access with RBAC, and deploy it as a scalable service within Kubernetes.

The agent we built serves as a foundational example, a diagnostic assistant that can quickly fetch information and provide initial insights. But this is just the tip of the iceberg. Imagine extending this agent with more sophisticated tools: tools that can not only read logs but also correlate events across different components, tools that can analyze resource utilization patterns and predict potential issues, or even tools that can safely execute pre-approved operational tasks based on observed conditions.

The future of observability might not just be about better dashboards and more data, but about intelligent assistants that actively help us understand and manage the increasing complexity of our cloud-native environments. By leveraging AI and frameworks like ADK, we are stepping towards a future where our Kubernetes clusters are not just monitored, but truly understood and intelligently operated, freeing up human engineers to focus on higher-level strategic initiatives. The potential to transform how we interact with and maintain our infrastructure is immense, and the journey has just begun.

Cloud Ops Chronicles
